Pandas Notebook 2, ATM350 Spring 2024

Contents

Pandas Notebook 2, ATM350 Spring 2024 ¶

Motivating Science Questions:¶

What was the daily temperature and precipitation at Albany last year?

What were the the days with the most precipitation?

Motivating Technical Question:¶

How can we use Pandas to do some basic statistical analyses of our data?

We’ll start by repeating some of the same steps we did in the first Pandas notebook.¶

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

sns.set()

file = '/spare11/atm350/common/data/climo_alb_2023.csv'

Display the first five lines of this file using Python’s built-in readline function

fileObj = open(file)

nLines = 5

for n in range(nLines):

line = fileObj.readline()

print(line)

DATE,MAX,MIN,AVG,DEP,HDD,CDD,PCP,SNW,DPT

2023-01-01,51,34,42.5,16.2,22,0,0.00,0.0,0

2023-01-02,51,29,40.0,13.9,25,0,0.00,0.0,0

2023-01-03,37,27,32.0,6.1,33,0,0.30,0.0,0

2023-01-04,43,37,40.0,14.3,25,0,0.08,0.0,0

df = pd.read_csv(file, dtype='string')

nRows = df.shape[0]

print ("Number of rows = %d" % nRows )

nCols = df.shape[1]

print ("Number of columns = %d" % nCols)

date = df['DATE']

date = pd.to_datetime(date,format="%Y-%m-%d")

maxT = df['MAX'].astype("float32")

minT = df['MIN'].astype("float32")

Number of rows = 365

Number of columns = 10

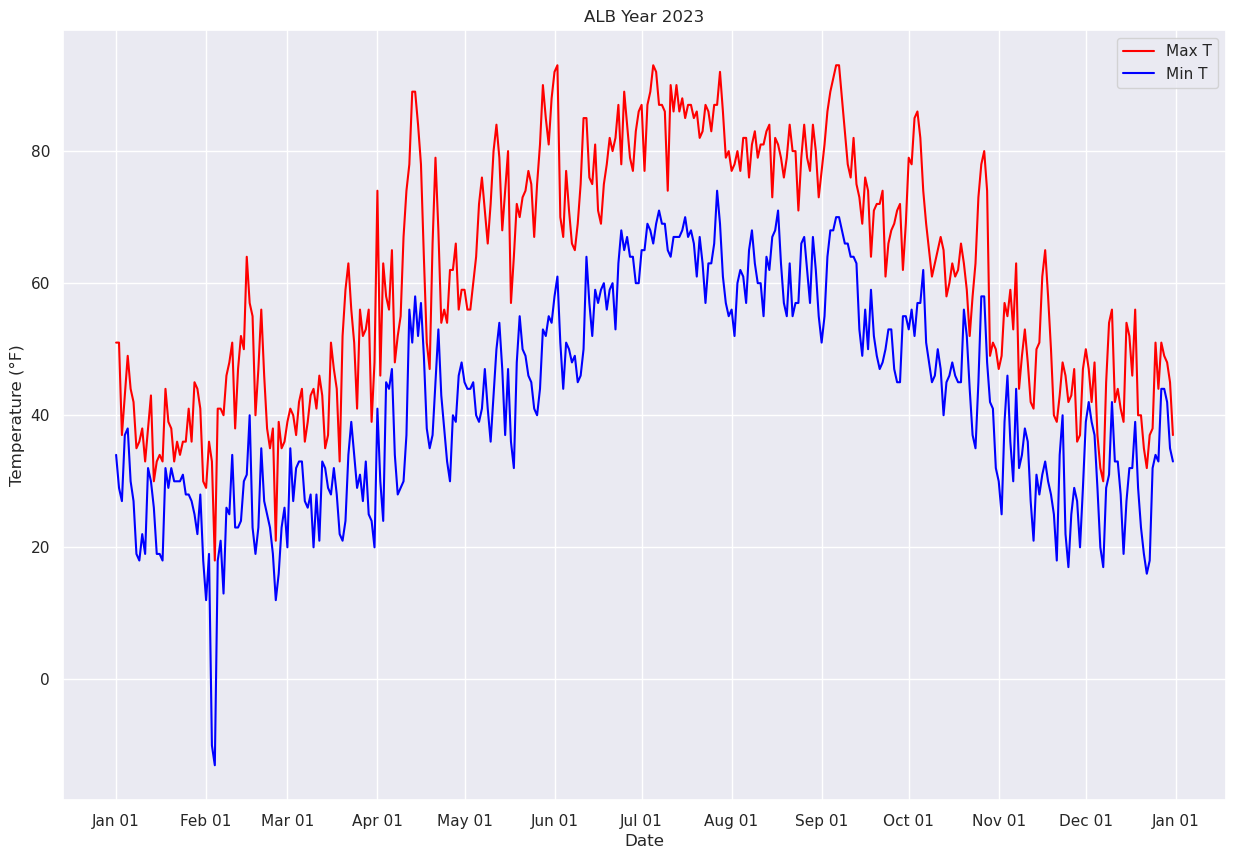

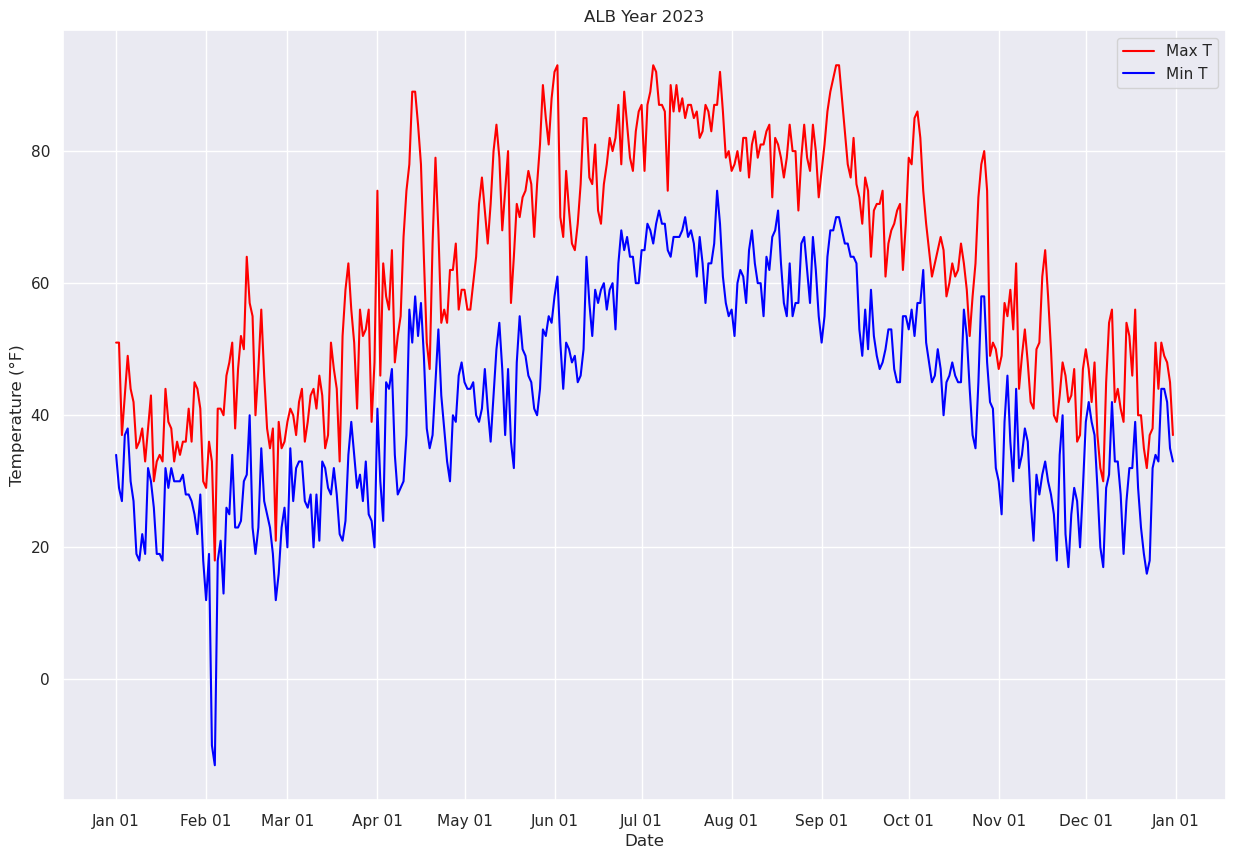

Let’s generate the final timeseries we made in our first Pandas notebook, with all the “bells and whistles” included.¶

from matplotlib.dates import DateFormatter, AutoDateLocator,HourLocator,DayLocator,MonthLocator

Set the year so we don’t have to edit the string labels every year!

year = 2023

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date, maxT, color='red',label = "Max T")

ax.plot (date, minT, color='blue', label = "Min T")

ax.set_title ("ALB Year %d" % year)

ax.set_xlabel('Date')

ax.set_ylabel('Temperature (°F)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a2203ffd0>

Read in precip data. This will be more challenging due to the presence of T(races).¶

Let’s remind ourselves what the Dataframe looks like, paying particular attention to the daily precip column (PCP).

df

| DATE | MAX | MIN | AVG | DEP | HDD | CDD | PCP | SNW | DPT | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2023-01-01 | 51 | 34 | 42.5 | 16.2 | 22 | 0 | 0.00 | 0.0 | 0 |

| 1 | 2023-01-02 | 51 | 29 | 40.0 | 13.9 | 25 | 0 | 0.00 | 0.0 | 0 |

| 2 | 2023-01-03 | 37 | 27 | 32.0 | 6.1 | 33 | 0 | 0.30 | 0.0 | 0 |

| 3 | 2023-01-04 | 43 | 37 | 40.0 | 14.3 | 25 | 0 | 0.08 | 0.0 | 0 |

| 4 | 2023-01-05 | 49 | 38 | 43.5 | 18.0 | 21 | 0 | 0.03 | 0.0 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 360 | 2023-12-27 | 51 | 44 | 47.5 | 20.1 | 17 | 0 | 0.30 | 0.0 | 0 |

| 361 | 2023-12-28 | 49 | 44 | 46.5 | 19.3 | 18 | 0 | 0.17 | 0.0 | 0 |

| 362 | 2023-12-29 | 48 | 42 | 45.0 | 18.1 | 20 | 0 | 0.15 | 0.0 | 0 |

| 363 | 2023-12-30 | 45 | 35 | 40.0 | 13.3 | 25 | 0 | 0.05 | 0.0 | 0 |

| 364 | 2023-12-31 | 37 | 33 | 35.0 | 8.5 | 30 | 0 | T | T | 0 |

365 rows × 10 columns

DataSeries called precip and populate it with the requisite column from our Dataframe. Then print out its values.# %load /spare11/atm350/common/feb22/02a.py

precip = df['PCP']

precip

0 0.00

1 0.00

2 0.30

3 0.08

4 0.03

...

360 0.30

361 0.17

362 0.15

363 0.05

364 T

Name: PCP, Length: 365, dtype: string

The task now is to convert these values from strings to floating point values. Our task is more complicated due to the presence of strings that are clearly not numerical … such as “T” for trace.¶

As we did in the first Pandas notebook with max temperatures greater than or equal to 90, create a subset of our Dataframe that consists only of those days where precip was a trace.¶

traceDays = df[precip=='T']

traceDays

| DATE | MAX | MIN | AVG | DEP | HDD | CDD | PCP | SNW | DPT | |

|---|---|---|---|---|---|---|---|---|---|---|

| 8 | 2023-01-09 | 36 | 18 | 27.0 | 2.2 | 38 | 0 | T | T | 0 |

| 9 | 2023-01-10 | 38 | 22 | 30.0 | 5.4 | 35 | 0 | T | T | 0 |

| 13 | 2023-01-14 | 30 | 26 | 28.0 | 3.9 | 37 | 0 | T | T | T |

| 20 | 2023-01-21 | 33 | 30 | 31.5 | 7.9 | 33 | 0 | T | T | T |

| 23 | 2023-01-24 | 36 | 31 | 33.5 | 9.9 | 31 | 0 | T | T | 7 |

| 26 | 2023-01-27 | 36 | 27 | 31.5 | 7.8 | 33 | 0 | T | T | 5 |

| 27 | 2023-01-28 | 45 | 25 | 35.0 | 11.3 | 30 | 0 | T | T | 4 |

| 30 | 2023-01-31 | 30 | 18 | 24.0 | 0.0 | 41 | 0 | T | 0.1 | 2 |

| 33 | 2023-02-03 | 33 | -10 | 11.5 | -12.8 | 53 | 0 | T | T | 2 |

| 40 | 2023-02-10 | 51 | 34 | 42.5 | 17.0 | 22 | 0 | T | 0.0 | 0 |

| 41 | 2023-02-11 | 38 | 23 | 30.5 | 4.8 | 34 | 0 | T | T | 0 |

| 43 | 2023-02-13 | 52 | 24 | 38.0 | 11.8 | 27 | 0 | T | 0.0 | 0 |

| 45 | 2023-02-15 | 64 | 31 | 47.5 | 20.8 | 17 | 0 | T | 0.0 | 0 |

| 46 | 2023-02-16 | 57 | 40 | 48.5 | 21.6 | 16 | 0 | T | 0.0 | 0 |

| 48 | 2023-02-18 | 40 | 19 | 29.5 | 2.0 | 35 | 0 | T | T | 0 |

| 54 | 2023-02-24 | 38 | 19 | 28.5 | -0.7 | 36 | 0 | T | T | 2 |

| 56 | 2023-02-26 | 39 | 16 | 27.5 | -2.3 | 37 | 0 | T | T | 4 |

| 63 | 2023-03-05 | 42 | 33 | 37.5 | 5.6 | 27 | 0 | T | 0.0 | 6 |

| 65 | 2023-03-07 | 36 | 27 | 31.5 | -1.1 | 33 | 0 | T | T | 2 |

| 67 | 2023-03-09 | 43 | 28 | 35.5 | 2.3 | 29 | 0 | T | T | 1 |

| 76 | 2023-03-18 | 44 | 28 | 36.0 | -0.3 | 29 | 0 | T | 0.3 | 2 |

| 77 | 2023-03-19 | 33 | 22 | 27.5 | -9.1 | 37 | 0 | T | T | T |

| 91 | 2023-04-02 | 46 | 30 | 38.0 | -4.1 | 27 | 0 | T | 0.0 | 0 |

| 105 | 2023-04-16 | 78 | 57 | 67.5 | 19.2 | 0 | 3 | T | 0.0 | 0 |

| 107 | 2023-04-18 | 51 | 38 | 44.5 | -4.7 | 20 | 0 | T | 0.0 | 0 |

| 108 | 2023-04-19 | 47 | 35 | 41.0 | -8.7 | 24 | 0 | T | 0.0 | 0 |

| 114 | 2023-04-25 | 54 | 33 | 43.5 | -8.8 | 21 | 0 | T | 0.0 | 0 |

| 123 | 2023-05-04 | 60 | 45 | 52.5 | -3.4 | 12 | 0 | T | 0.0 | 0 |

| 124 | 2023-05-05 | 64 | 40 | 52.0 | -4.2 | 13 | 0 | T | 0.0 | 0 |

| 126 | 2023-05-07 | 76 | 41 | 58.5 | 1.6 | 6 | 0 | T | 0.0 | 0 |

| 153 | 2023-06-03 | 70 | 51 | 60.5 | -4.4 | 4 | 0 | T | 0.0 | 0 |

| 160 | 2023-06-10 | 75 | 46 | 60.5 | -6.5 | 4 | 0 | T | 0.0 | 0 |

| 162 | 2023-06-12 | 85 | 64 | 74.5 | 7.0 | 0 | 10 | T | 0.0 | 0 |

| 173 | 2023-06-23 | 87 | 63 | 75.0 | 4.5 | 0 | 10 | T | 0.0 | 0 |

| 175 | 2023-06-25 | 89 | 65 | 77.0 | 6.1 | 0 | 12 | T | 0.0 | 0 |

| 220 | 2023-08-09 | 83 | 63 | 73.0 | 0.7 | 0 | 8 | T | 0.0 | 0 |

| 227 | 2023-08-16 | 82 | 68 | 75.0 | 3.4 | 0 | 10 | T | 0.0 | 0 |

| 230 | 2023-08-19 | 76 | 57 | 66.5 | -4.7 | 0 | 2 | T | 0.0 | 0 |

| 232 | 2023-08-21 | 84 | 63 | 73.5 | 2.6 | 0 | 9 | T | 0.0 | 0 |

| 256 | 2023-09-14 | 73 | 53 | 63.0 | -1.3 | 2 | 0 | T | 0.0 | 0 |

| 261 | 2023-09-19 | 71 | 52 | 61.5 | -0.8 | 3 | 0 | T | 0.0 | 0 |

| 282 | 2023-10-10 | 63 | 46 | 54.5 | 1.0 | 10 | 0 | T | 0.0 | 0 |

| 288 | 2023-10-16 | 63 | 48 | 55.5 | 4.3 | 9 | 0 | T | 0.0 | 0 |

| 290 | 2023-10-18 | 62 | 45 | 53.5 | 3.0 | 11 | 0 | T | 0.0 | 0 |

| 298 | 2023-10-26 | 78 | 58 | 68.0 | 20.3 | 0 | 3 | T | 0.0 | 0 |

| 307 | 2023-11-04 | 55 | 46 | 50.5 | 6.0 | 14 | 0 | T | 0.0 | 0 |

| 322 | 2023-11-19 | 50 | 28 | 39.0 | -0.2 | 26 | 0 | T | 0.0 | 0 |

| 327 | 2023-11-24 | 46 | 22 | 34.0 | -3.4 | 31 | 0 | T | T | 0 |

| 331 | 2023-11-28 | 36 | 27 | 31.5 | -4.5 | 33 | 0 | T | T | T |

| 332 | 2023-11-29 | 37 | 20 | 28.5 | -7.2 | 36 | 0 | T | T | 0 |

| 346 | 2023-12-13 | 41 | 28 | 34.5 | 3.3 | 30 | 0 | T | 0.1 | 0 |

| 358 | 2023-12-25 | 51 | 34 | 42.5 | 14.6 | 22 | 0 | T | 0.0 | 0 |

| 364 | 2023-12-31 | 37 | 33 | 35.0 | 8.5 | 30 | 0 | T | T | 0 |

traceDays.shape

(53, 10)

traceDays.shape[0]

53

shape attribute.# %load /spare11/atm350/common/feb22/02b.py

print (df[precip=='T'].shape)

numTraceDays = df[precip == 'T'].shape[0]

print (f"The total # of days in Albany in {year} that had a trace of precipitation was {numTraceDays}")

(53, 10)

The total # of days in Albany in 2023 that had a trace of precipitation was 53

Getting back to our task of converting precip amounts from strings to floating point numbers, one thing we could do is to create a new array and populate it via a loop, where we’d use an if-else logical test to check for Trace values and set the precip value to 0.00 for each day accordingly.¶

There is a more efficient way to do this, though!¶

We use the loc method of Pandas to find all elements of a DataSeries with a certain value, and then change that value to something else, all in the same line of code!¶

In this case, let’s set all values of ‘T’ to ‘0.00’¶

The line below is what we want! Before we execute it, let’s break it up into pieces.

df.loc[df['PCP'] =='T', ['PCP']] = '0.00'

First, create a Series of booleans corresponding to the specified condition.¶

df['PCP'] == 'T'

0 False

1 False

2 False

3 False

4 False

...

360 False

361 False

362 False

363 False

364 True

Name: PCP, Length: 365, dtype: boolean

Next, build on that cell by using loc to display all rows that correspond to the condition being True.¶

df.loc[df['PCP'] == 'T']

| DATE | MAX | MIN | AVG | DEP | HDD | CDD | PCP | SNW | DPT | |

|---|---|---|---|---|---|---|---|---|---|---|

| 8 | 2023-01-09 | 36 | 18 | 27.0 | 2.2 | 38 | 0 | T | T | 0 |

| 9 | 2023-01-10 | 38 | 22 | 30.0 | 5.4 | 35 | 0 | T | T | 0 |

| 13 | 2023-01-14 | 30 | 26 | 28.0 | 3.9 | 37 | 0 | T | T | T |

| 20 | 2023-01-21 | 33 | 30 | 31.5 | 7.9 | 33 | 0 | T | T | T |

| 23 | 2023-01-24 | 36 | 31 | 33.5 | 9.9 | 31 | 0 | T | T | 7 |

| 26 | 2023-01-27 | 36 | 27 | 31.5 | 7.8 | 33 | 0 | T | T | 5 |

| 27 | 2023-01-28 | 45 | 25 | 35.0 | 11.3 | 30 | 0 | T | T | 4 |

| 30 | 2023-01-31 | 30 | 18 | 24.0 | 0.0 | 41 | 0 | T | 0.1 | 2 |

| 33 | 2023-02-03 | 33 | -10 | 11.5 | -12.8 | 53 | 0 | T | T | 2 |

| 40 | 2023-02-10 | 51 | 34 | 42.5 | 17.0 | 22 | 0 | T | 0.0 | 0 |

| 41 | 2023-02-11 | 38 | 23 | 30.5 | 4.8 | 34 | 0 | T | T | 0 |

| 43 | 2023-02-13 | 52 | 24 | 38.0 | 11.8 | 27 | 0 | T | 0.0 | 0 |

| 45 | 2023-02-15 | 64 | 31 | 47.5 | 20.8 | 17 | 0 | T | 0.0 | 0 |

| 46 | 2023-02-16 | 57 | 40 | 48.5 | 21.6 | 16 | 0 | T | 0.0 | 0 |

| 48 | 2023-02-18 | 40 | 19 | 29.5 | 2.0 | 35 | 0 | T | T | 0 |

| 54 | 2023-02-24 | 38 | 19 | 28.5 | -0.7 | 36 | 0 | T | T | 2 |

| 56 | 2023-02-26 | 39 | 16 | 27.5 | -2.3 | 37 | 0 | T | T | 4 |

| 63 | 2023-03-05 | 42 | 33 | 37.5 | 5.6 | 27 | 0 | T | 0.0 | 6 |

| 65 | 2023-03-07 | 36 | 27 | 31.5 | -1.1 | 33 | 0 | T | T | 2 |

| 67 | 2023-03-09 | 43 | 28 | 35.5 | 2.3 | 29 | 0 | T | T | 1 |

| 76 | 2023-03-18 | 44 | 28 | 36.0 | -0.3 | 29 | 0 | T | 0.3 | 2 |

| 77 | 2023-03-19 | 33 | 22 | 27.5 | -9.1 | 37 | 0 | T | T | T |

| 91 | 2023-04-02 | 46 | 30 | 38.0 | -4.1 | 27 | 0 | T | 0.0 | 0 |

| 105 | 2023-04-16 | 78 | 57 | 67.5 | 19.2 | 0 | 3 | T | 0.0 | 0 |

| 107 | 2023-04-18 | 51 | 38 | 44.5 | -4.7 | 20 | 0 | T | 0.0 | 0 |

| 108 | 2023-04-19 | 47 | 35 | 41.0 | -8.7 | 24 | 0 | T | 0.0 | 0 |

| 114 | 2023-04-25 | 54 | 33 | 43.5 | -8.8 | 21 | 0 | T | 0.0 | 0 |

| 123 | 2023-05-04 | 60 | 45 | 52.5 | -3.4 | 12 | 0 | T | 0.0 | 0 |

| 124 | 2023-05-05 | 64 | 40 | 52.0 | -4.2 | 13 | 0 | T | 0.0 | 0 |

| 126 | 2023-05-07 | 76 | 41 | 58.5 | 1.6 | 6 | 0 | T | 0.0 | 0 |

| 153 | 2023-06-03 | 70 | 51 | 60.5 | -4.4 | 4 | 0 | T | 0.0 | 0 |

| 160 | 2023-06-10 | 75 | 46 | 60.5 | -6.5 | 4 | 0 | T | 0.0 | 0 |

| 162 | 2023-06-12 | 85 | 64 | 74.5 | 7.0 | 0 | 10 | T | 0.0 | 0 |

| 173 | 2023-06-23 | 87 | 63 | 75.0 | 4.5 | 0 | 10 | T | 0.0 | 0 |

| 175 | 2023-06-25 | 89 | 65 | 77.0 | 6.1 | 0 | 12 | T | 0.0 | 0 |

| 220 | 2023-08-09 | 83 | 63 | 73.0 | 0.7 | 0 | 8 | T | 0.0 | 0 |

| 227 | 2023-08-16 | 82 | 68 | 75.0 | 3.4 | 0 | 10 | T | 0.0 | 0 |

| 230 | 2023-08-19 | 76 | 57 | 66.5 | -4.7 | 0 | 2 | T | 0.0 | 0 |

| 232 | 2023-08-21 | 84 | 63 | 73.5 | 2.6 | 0 | 9 | T | 0.0 | 0 |

| 256 | 2023-09-14 | 73 | 53 | 63.0 | -1.3 | 2 | 0 | T | 0.0 | 0 |

| 261 | 2023-09-19 | 71 | 52 | 61.5 | -0.8 | 3 | 0 | T | 0.0 | 0 |

| 282 | 2023-10-10 | 63 | 46 | 54.5 | 1.0 | 10 | 0 | T | 0.0 | 0 |

| 288 | 2023-10-16 | 63 | 48 | 55.5 | 4.3 | 9 | 0 | T | 0.0 | 0 |

| 290 | 2023-10-18 | 62 | 45 | 53.5 | 3.0 | 11 | 0 | T | 0.0 | 0 |

| 298 | 2023-10-26 | 78 | 58 | 68.0 | 20.3 | 0 | 3 | T | 0.0 | 0 |

| 307 | 2023-11-04 | 55 | 46 | 50.5 | 6.0 | 14 | 0 | T | 0.0 | 0 |

| 322 | 2023-11-19 | 50 | 28 | 39.0 | -0.2 | 26 | 0 | T | 0.0 | 0 |

| 327 | 2023-11-24 | 46 | 22 | 34.0 | -3.4 | 31 | 0 | T | T | 0 |

| 331 | 2023-11-28 | 36 | 27 | 31.5 | -4.5 | 33 | 0 | T | T | T |

| 332 | 2023-11-29 | 37 | 20 | 28.5 | -7.2 | 36 | 0 | T | T | 0 |

| 346 | 2023-12-13 | 41 | 28 | 34.5 | 3.3 | 30 | 0 | T | 0.1 | 0 |

| 358 | 2023-12-25 | 51 | 34 | 42.5 | 14.6 | 22 | 0 | T | 0.0 | 0 |

| 364 | 2023-12-31 | 37 | 33 | 35.0 | 8.5 | 30 | 0 | T | T | 0 |

Further build this line of code by only returning the column of interest.¶

df.loc[df['PCP'] =='T', ['PCP']]

| PCP | |

|---|---|

| 8 | T |

| 9 | T |

| 13 | T |

| 20 | T |

| 23 | T |

| 26 | T |

| 27 | T |

| 30 | T |

| 33 | T |

| 40 | T |

| 41 | T |

| 43 | T |

| 45 | T |

| 46 | T |

| 48 | T |

| 54 | T |

| 56 | T |

| 63 | T |

| 65 | T |

| 67 | T |

| 76 | T |

| 77 | T |

| 91 | T |

| 105 | T |

| 107 | T |

| 108 | T |

| 114 | T |

| 123 | T |

| 124 | T |

| 126 | T |

| 153 | T |

| 160 | T |

| 162 | T |

| 173 | T |

| 175 | T |

| 220 | T |

| 227 | T |

| 230 | T |

| 232 | T |

| 256 | T |

| 261 | T |

| 282 | T |

| 288 | T |

| 290 | T |

| 298 | T |

| 307 | T |

| 322 | T |

| 327 | T |

| 331 | T |

| 332 | T |

| 346 | T |

| 358 | T |

| 364 | T |

Finally, we have arrived at the full line of code! Take the column of interest, in this case precip only on those days where a trace was measured, and set its value to 0.00.¶

df.loc[df['PCP'] =='T', ['PCP']] = '0.00'

df['PCP']

0 0.00

1 0.00

2 0.30

3 0.08

4 0.03

...

360 0.30

361 0.17

362 0.15

363 0.05

364 0.00

Name: PCP, Length: 365, dtype: string

This operation actually modifies the Dataframe in place . We can prove this by printing out a row from a date that we know had a trace amount.¶

But first how do we simply print a specific row from a dataframe? Since we know that Jan. 9 had a trace of precip, try this:¶

jan09 = df['DATE'] == '2023-01-09'

jan09

0 False

1 False

2 False

3 False

4 False

...

360 False

361 False

362 False

363 False

364 False

Name: DATE, Length: 365, dtype: boolean

That produces a series of booleans; the one matching our condition is True. Now we can retrieve all the values for this date.¶

df[jan09]

| DATE | MAX | MIN | AVG | DEP | HDD | CDD | PCP | SNW | DPT | |

|---|---|---|---|---|---|---|---|---|---|---|

| 8 | 2023-01-09 | 36 | 18 | 27.0 | 2.2 | 38 | 0 | 0.00 | T | 0 |

We see that the precip has now been set to 0.00.¶

Having done this check, and thus re-set the values, let’s now convert this series into floating point values.¶

precip = df['PCP'].astype("float32")

precip

0 0.00

1 0.00

2 0.30

3 0.08

4 0.03

...

360 0.30

361 0.17

362 0.15

363 0.05

364 0.00

Name: PCP, Length: 365, dtype: float32

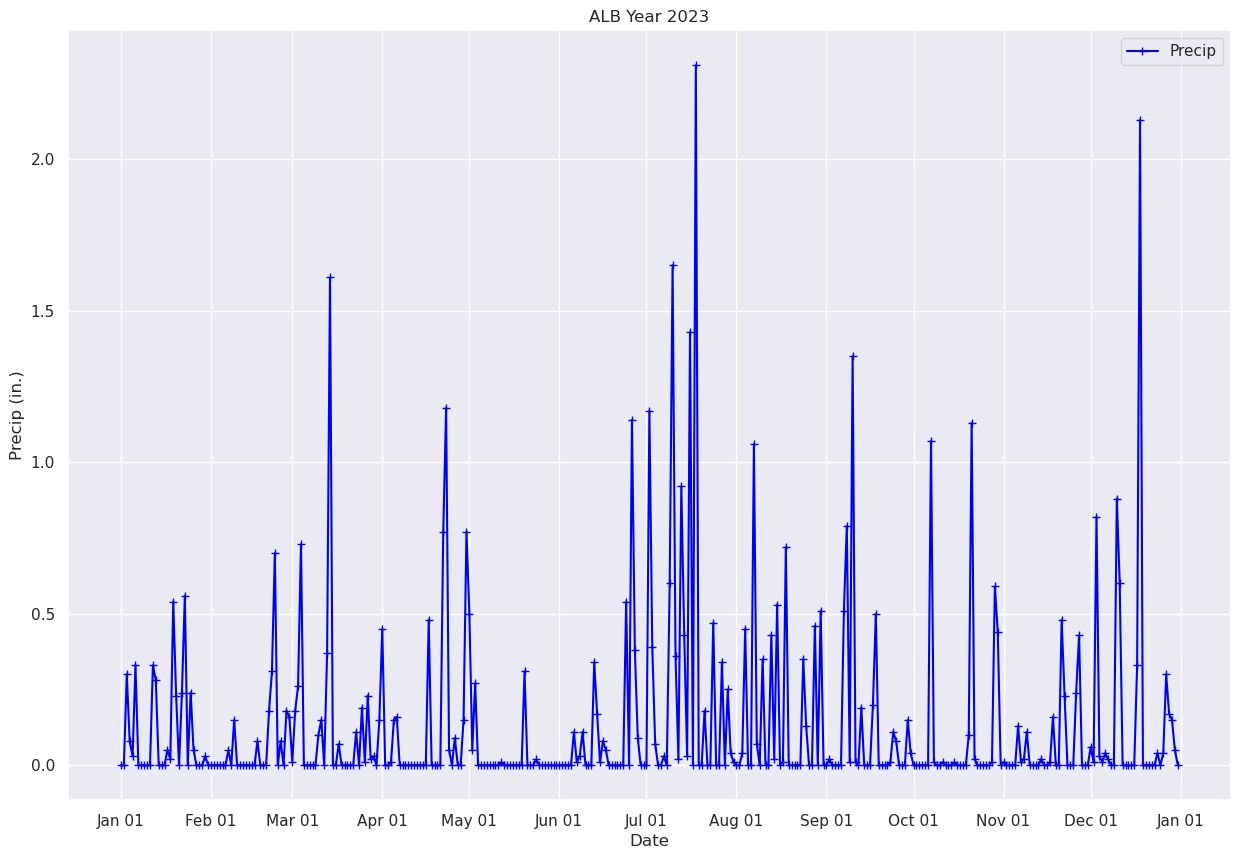

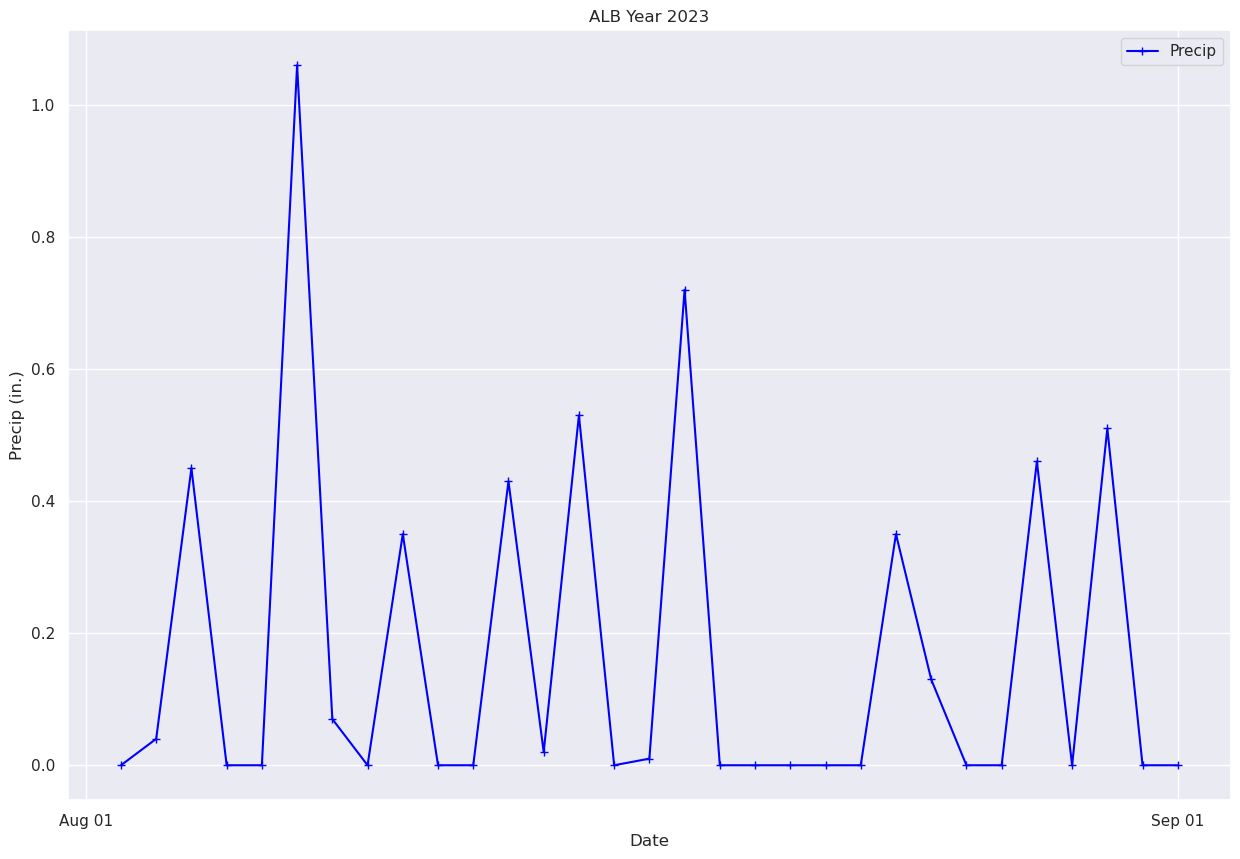

Plot each day’s precip total.¶

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date, precip, color='blue', marker='+',label = "Precip")

ax.set_title (f"ALB Year {year}")

ax.set_xlabel('Date')

ax.set_ylabel('Precip (in.)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a21ecccd0>

# %load /spare11/atm350/common/feb22/02a.py

precipT = df['PCP']

What if we just want to pick a certain time range? One simple way is to just pass in a subset of our x and y to the plot method.¶

# Plot out just the trace for October. Corresponds to Julian days 214-245 ... thus, indices 213-244 (why?).

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date[213:244], precip[213:244], color='blue', marker='+',label = "Precip")

ax.set_title (f"ALB Year {year}")

ax.set_xlabel('Date')

ax.set_ylabel('Precip (in.)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a21f5ccd0>

# %load '/spare11/atm350/common/feb22/02c.py'

wetDays = df[precip>=1.00]

wetDays

| DATE | MAX | MIN | AVG | DEP | HDD | CDD | PCP | SNW | DPT | |

|---|---|---|---|---|---|---|---|---|---|---|

| 72 | 2023-03-14 | 35 | 32 | 33.5 | -1.4 | 31 | 0 | 1.61 | 9.9 | 6 |

| 112 | 2023-04-23 | 54 | 43 | 48.5 | -2.9 | 16 | 0 | 1.18 | 0.0 | 0 |

| 176 | 2023-06-26 | 84 | 67 | 75.5 | 4.3 | 0 | 11 | 1.14 | 0.0 | 0 |

| 182 | 2023-07-02 | 77 | 65 | 71.0 | -1.3 | 0 | 6 | 1.17 | 0.0 | 0 |

| 190 | 2023-07-10 | 74 | 65 | 69.5 | -3.7 | 0 | 5 | 1.65 | 0.0 | 0 |

| 196 | 2023-07-16 | 85 | 70 | 77.5 | 4.0 | 0 | 13 | 1.43 | 0.0 | 0 |

| 198 | 2023-07-18 | 87 | 68 | 77.5 | 4.0 | 0 | 13 | 2.31 | 0.0 | 0 |

| 218 | 2023-08-07 | 76 | 65 | 70.5 | -2.0 | 0 | 6 | 1.06 | 0.0 | 0 |

| 252 | 2023-09-10 | 78 | 66 | 72.0 | 6.2 | 0 | 7 | 1.35 | 0.0 | 0 |

| 279 | 2023-10-07 | 69 | 51 | 60.0 | 5.3 | 5 | 0 | 1.07 | 0.0 | 0 |

| 293 | 2023-10-21 | 59 | 52 | 55.5 | 6.1 | 9 | 0 | 1.13 | 0.0 | 0 |

| 351 | 2023-12-18 | 56 | 39 | 47.5 | 17.8 | 17 | 0 | 2.13 | 0.0 | 0 |

# Split the cell here so the table above will be displayed!

numWetDays = wetDays.shape[0]

print (f"The total # of days in Albany in {year} that had at least 1.00 in. of precip was {numWetDays}" )

The total # of days in Albany in 2023 that had at least 1.00 in. of precip was 12

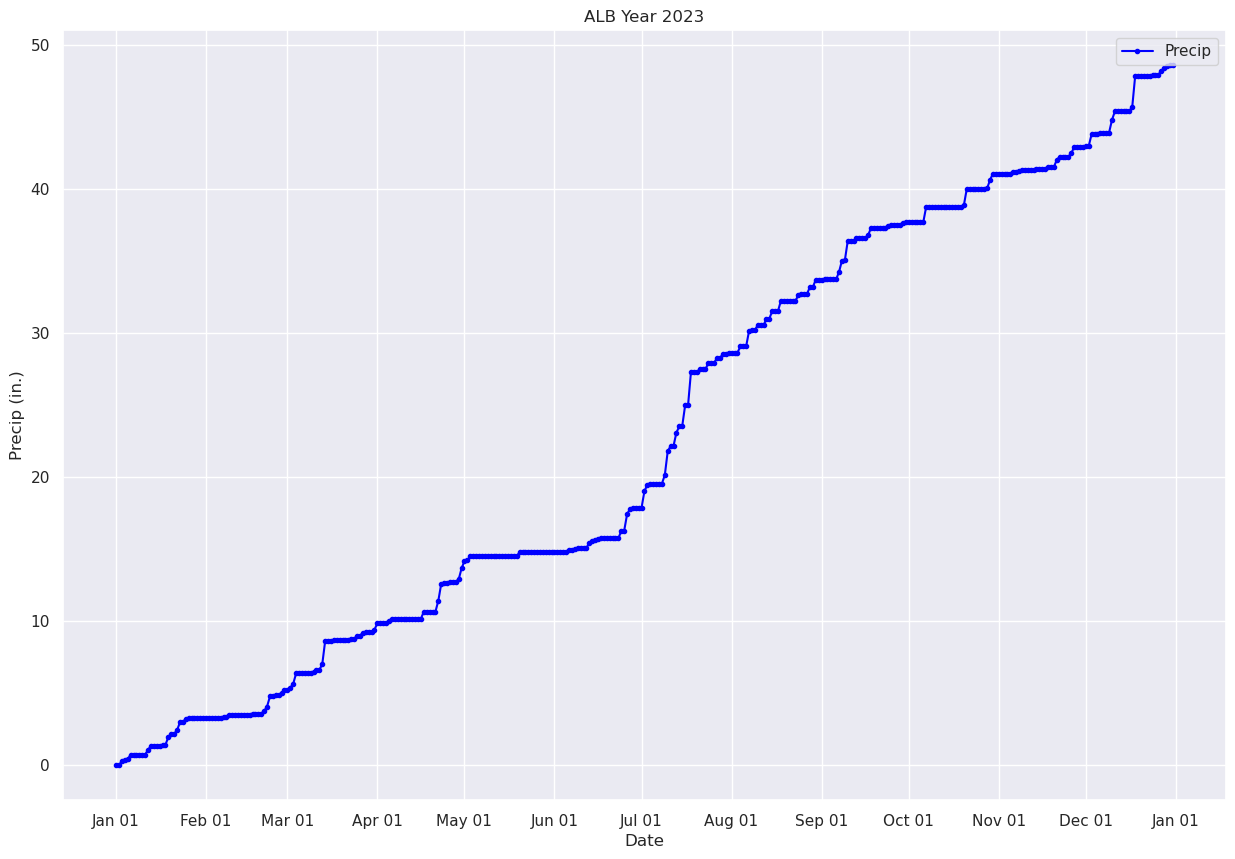

Pandas has a function to compute the cumulative sum of a series. We’ll use it to compute and graph Albany’s total precip over the year.¶

precipTotal = precip.cumsum()

precipTotal

0 0.000000

1 0.000000

2 0.300000

3 0.380000

4 0.410000

...

360 48.249989

361 48.419987

362 48.569988

363 48.619987

364 48.619987

Name: PCP, Length: 365, dtype: float32

We can see that the final total is in the last element of the precipTotal array. How can we explicitly print out just this value?¶

One of the methods available to us in a Pandas DataSeries is values. Let’s display it:¶

precipTotal.values

array([ 0. , 0. , 0.3 , 0.38 , 0.41 ,

0.74 , 0.74 , 0.74 , 0.74 , 0.74 ,

0.74 , 1.07 , 1.35 , 1.35 , 1.35 ,

1.35 , 1.4 , 1.42 , 1.96 , 2.19 ,

2.19 , 2.43 , 2.99 , 2.99 , 3.23 ,

3.28 , 3.28 , 3.28 , 3.28 , 3.31 ,

3.31 , 3.31 , 3.31 , 3.31 , 3.31 ,

3.31 , 3.31 , 3.36 , 3.36 , 3.51 ,

3.51 , 3.51 , 3.51 , 3.51 , 3.51 ,

3.51 , 3.51 , 3.59 , 3.59 , 3.59 ,

3.59 , 3.77 , 4.08 , 4.7799997, 4.7799997,

4.8599997, 4.8599997, 5.0399995, 5.1999993, 5.2099996,

5.3899994, 5.6499996, 6.3799996, 6.3799996, 6.3799996,

6.3799996, 6.3799996, 6.3799996, 6.4799995, 6.6299996,

6.6299996, 6.9999995, 8.61 , 8.61 , 8.61 ,

8.679999 , 8.679999 , 8.679999 , 8.679999 , 8.679999 ,

8.679999 , 8.789999 , 8.789999 , 8.979999 , 8.989999 ,

9.219998 , 9.239999 , 9.269999 , 9.269999 , 9.419998 ,

9.869998 , 9.869998 , 9.869998 , 9.879998 , 10.029998 ,

10.189998 , 10.189998 , 10.189998 , 10.189998 , 10.189998 ,

10.189998 , 10.189998 , 10.189998 , 10.189998 , 10.189998 ,

10.189998 , 10.669997 , 10.669997 , 10.669997 , 10.669997 ,

10.669997 , 11.439997 , 12.619997 , 12.669997 , 12.669997 ,

12.759997 , 12.759997 , 12.759997 , 12.909997 , 13.6799965,

14.1799965, 14.229997 , 14.499997 , 14.499997 , 14.499997 ,

14.499997 , 14.499997 , 14.499997 , 14.499997 , 14.499997 ,

14.499997 , 14.509997 , 14.509997 , 14.509997 , 14.509997 ,

14.509997 , 14.509997 , 14.509997 , 14.509997 , 14.819998 ,

14.819998 , 14.819998 , 14.819998 , 14.839998 , 14.839998 ,

14.839998 , 14.839998 , 14.839998 , 14.839998 , 14.839998 ,

14.839998 , 14.839998 , 14.839998 , 14.839998 , 14.839998 ,

14.839998 , 14.949998 , 14.959998 , 14.989998 , 15.0999975,

15.0999975, 15.0999975, 15.0999975, 15.439998 , 15.609998 ,

15.619998 , 15.699998 , 15.749998 , 15.749998 , 15.749998 ,

15.749998 , 15.749998 , 15.749998 , 15.749998 , 16.289999 ,

16.289999 , 17.429998 , 17.809998 , 17.899998 , 17.899998 ,

17.899998 , 17.899998 , 19.069998 , 19.459997 , 19.529997 ,

19.529997 , 19.529997 , 19.559998 , 19.559998 , 20.159998 ,

21.809998 , 22.169998 , 22.189999 , 23.109999 , 23.539999 ,

23.57 , 25. , 25. , 27.31 , 27.31 ,

27.31 , 27.49 , 27.49 , 27.49 , 27.96 ,

27.96 , 27.96 , 28.3 , 28.3 , 28.55 ,

28.59 , 28.6 , 28.6 , 28.6 , 28.640001 ,

29.090002 , 29.090002 , 29.090002 , 30.150002 , 30.220001 ,

30.220001 , 30.570002 , 30.570002 , 30.570002 , 31.000002 ,

31.020002 , 31.550003 , 31.550003 , 31.560003 , 32.280003 ,

32.280003 , 32.280003 , 32.280003 , 32.280003 , 32.280003 ,

32.63 , 32.760002 , 32.760002 , 32.760002 , 33.22 ,

33.22 , 33.73 , 33.73 , 33.73 , 33.75 ,

33.75 , 33.75 , 33.75 , 33.75 , 34.26 ,

35.05 , 35.059998 , 36.409996 , 36.419994 , 36.419994 ,

36.609993 , 36.609993 , 36.609993 , 36.609993 , 36.809994 ,

37.309994 , 37.309994 , 37.309994 , 37.309994 , 37.309994 ,

37.319992 , 37.429993 , 37.509995 , 37.509995 , 37.509995 ,

37.509995 , 37.659996 , 37.699997 , 37.699997 , 37.699997 ,

37.699997 , 37.699997 , 37.699997 , 37.699997 , 38.769997 ,

38.779995 , 38.779995 , 38.779995 , 38.789993 , 38.789993 ,

38.789993 , 38.789993 , 38.79999 , 38.79999 , 38.79999 ,

38.79999 , 38.79999 , 38.89999 , 40.02999 , 40.04999 ,

40.04999 , 40.04999 , 40.04999 , 40.04999 , 40.04999 ,

40.05999 , 40.64999 , 41.08999 , 41.08999 , 41.099987 ,

41.099987 , 41.099987 , 41.099987 , 41.099987 , 41.22999 ,

41.239986 , 41.259987 , 41.369987 , 41.369987 , 41.369987 ,

41.369987 , 41.369987 , 41.389988 , 41.389988 , 41.389988 ,

41.399986 , 41.559986 , 41.559986 , 41.559986 , 42.039986 ,

42.269985 , 42.269985 , 42.269985 , 42.269985 , 42.509987 ,

42.939987 , 42.939987 , 42.939987 , 42.939987 , 42.99999 ,

43.009987 , 43.829987 , 43.859985 , 43.869984 , 43.909985 ,

43.929985 , 43.929985 , 43.929985 , 44.809986 , 45.409985 ,

45.409985 , 45.409985 , 45.409985 , 45.409985 , 45.409985 ,

45.739986 , 47.869987 , 47.869987 , 47.869987 , 47.869987 ,

47.869987 , 47.869987 , 47.90999 , 47.90999 , 47.94999 ,

48.24999 , 48.419987 , 48.56999 , 48.619987 , 48.619987 ],

dtype=float32)

# %load '/spare11/atm350/common/feb22/02d.py'

# Print out the value of the last element in the array

precipTotal.values[-1]

48.619987

Plot the timeseries of the cumulative precip for Albany over the year.¶

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date, precipTotal, color='blue', marker='.',label = "Precip")

ax.set_title (f"ALB Year {year}")

ax.set_xlabel('Date')

ax.set_ylabel('Precip (in.)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a21eae690>

Pandas has a plethora of statistical analysis methods to apply on tabular data. An excellent summary method is describe.¶

maxT.describe()

count 365.000000

mean 61.879452

std 18.183035

min 18.000000

25% 46.000000

50% 63.000000

75% 79.000000

max 93.000000

Name: MAX, dtype: float64

minT.describe()

count 365.000000

mean 42.816437

std 16.101021

min -13.000000

25% 30.000000

50% 44.000000

75% 57.000000

max 74.000000

Name: MIN, dtype: float64

precip.describe()

count 365.000000

mean 0.133205

std 0.306733

min 0.000000

25% 0.000000

50% 0.000000

75% 0.110000

max 2.310000

Name: PCP, dtype: float64

- First, express the condition where precip is equal to 0.00.

- Then, determine the # of rows of that resulting series.

# %load /spare11/atm350/common/feb22/02e.py

subset = precip[precip == 0.00]

nRows = subset.shape[0]

print (f"The number of days where precip was a trace or less was {nRows}")

print ("Since this is represents more than half the days of the years, the median must = 0")

The number of days where precip was a trace or less was 209

Since this is represents more than half the days of the years, the median must = 0

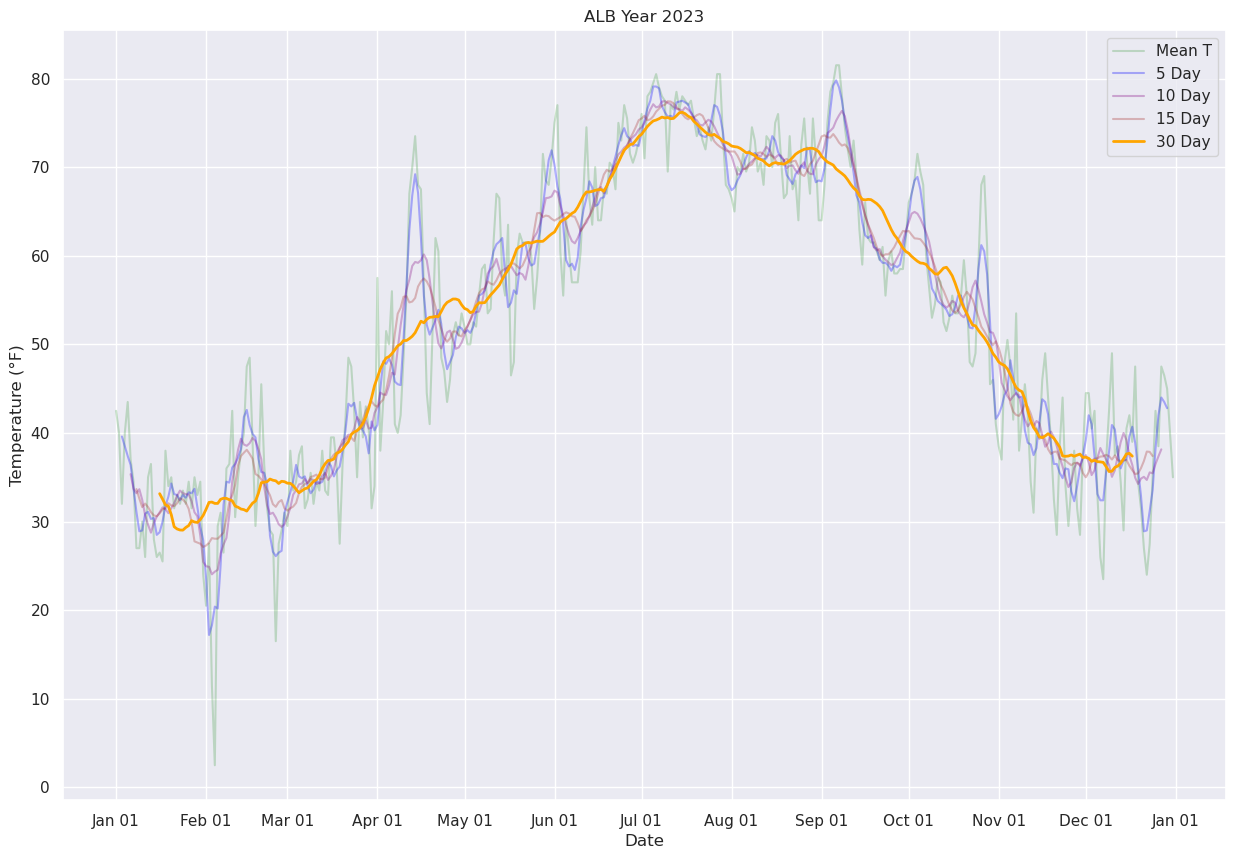

We’ll wrap up by calculating and then plotting rolling means over a period of days in the year, in order to smooth out the day-to-day variations.¶

First, let’s replot the max and min temperature trace for the entire year, day-by-day.

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date, maxT, color='red',label = "Max T")

ax.plot (date, minT, color='blue', label = "Min T")

ax.set_title (f"ALB Year {year}")

ax.set_xlabel('Date')

ax.set_ylabel('Temperature (°F)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a21a5ccd0>

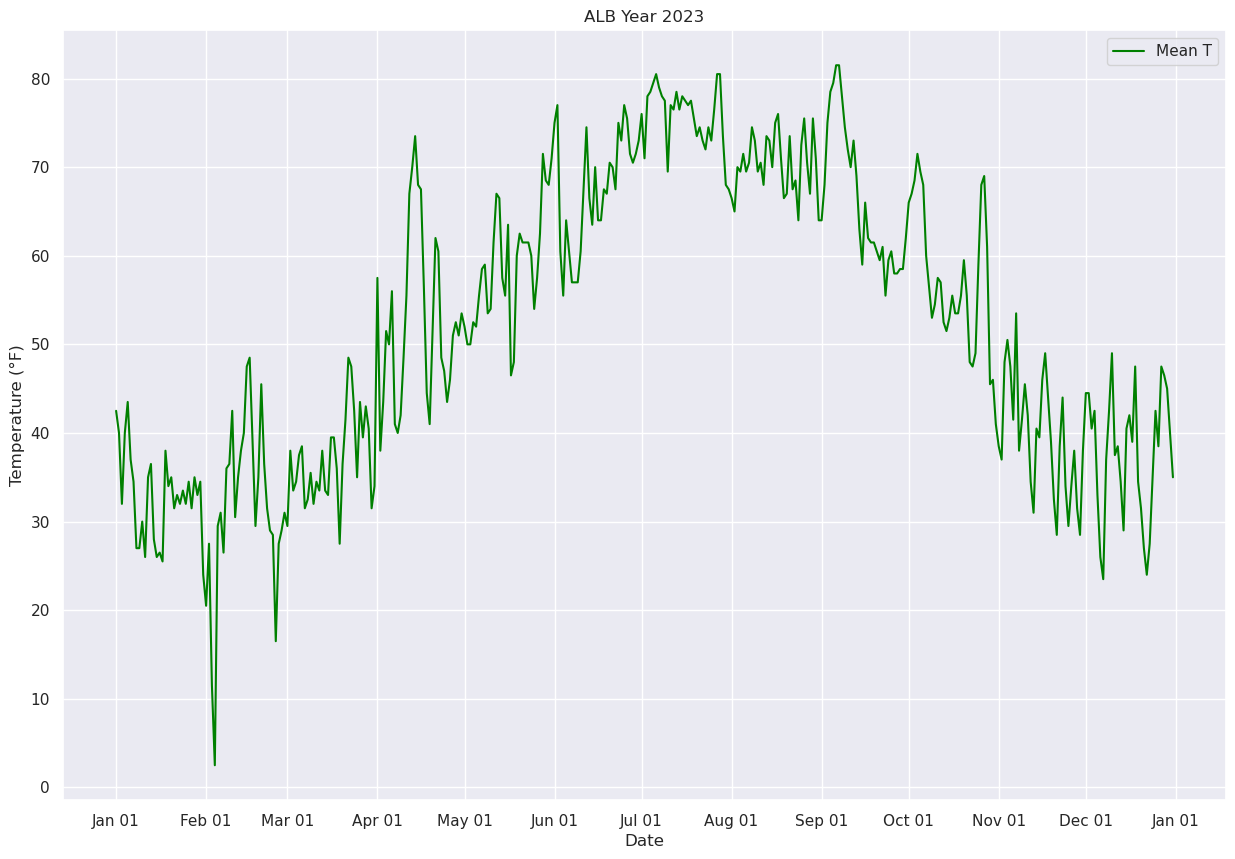

Now, let’s calculate and plot the daily mean temperature.

meanT = (maxT + minT) / 2.

meanT

0 42.5

1 40.0

2 32.0

3 40.0

4 43.5

...

360 47.5

361 46.5

362 45.0

363 40.0

364 35.0

Length: 365, dtype: float32

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date, meanT, color='green',label = "Mean T")

ax.set_title (f"ALB Year {year}")

ax.set_xlabel('Date')

ax.set_ylabel('Temperature (°F)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a19219f50>

Next, let’s use Pandas’ rolling method to calculate the mean over a specified number of days. We’ll center the window at the midpoint of each period (thus, for a 30-day window, the first plotted point will be on Jan. 16 … covering the Jan. 1 –> Jan. 30 timeframe.

meanTr5 = meanT.rolling(window=5, center=True)

meanTr10 = meanT.rolling(window=10, center=True)

meanTr15 = meanT.rolling(window=15, center=True)

meanTr30 = meanT.rolling(window=30, center=True)

meanTr30.mean()

0 NaN

1 NaN

2 NaN

3 NaN

4 NaN

..

360 NaN

361 NaN

362 NaN

363 NaN

364 NaN

Length: 365, dtype: float64

meanTr5.mean()

0 NaN

1 NaN

2 39.6

3 38.5

4 37.4

...

360 44.0

361 43.5

362 42.8

363 NaN

364 NaN

Length: 365, dtype: float64

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date, meanT, color='green',label = "Mean T",alpha=0.2)

ax.plot (date, meanTr5.mean(), color='blue',label = "5 Day", alpha=0.3)

ax.plot (date, meanTr10.mean(), color='purple',label = "10 Day", alpha=0.3)

ax.plot (date, meanTr15.mean(), color='brown',label = "15 Day", alpha=0.3)

ax.plot (date, meanTr30.mean(), color='orange',label = "30 Day", alpha=1.0, linewidth=2)

ax.set_title (f"ALB Year {year}")

ax.set_xlabel('Date')

ax.set_ylabel('Temperature (°F)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a190d3050>

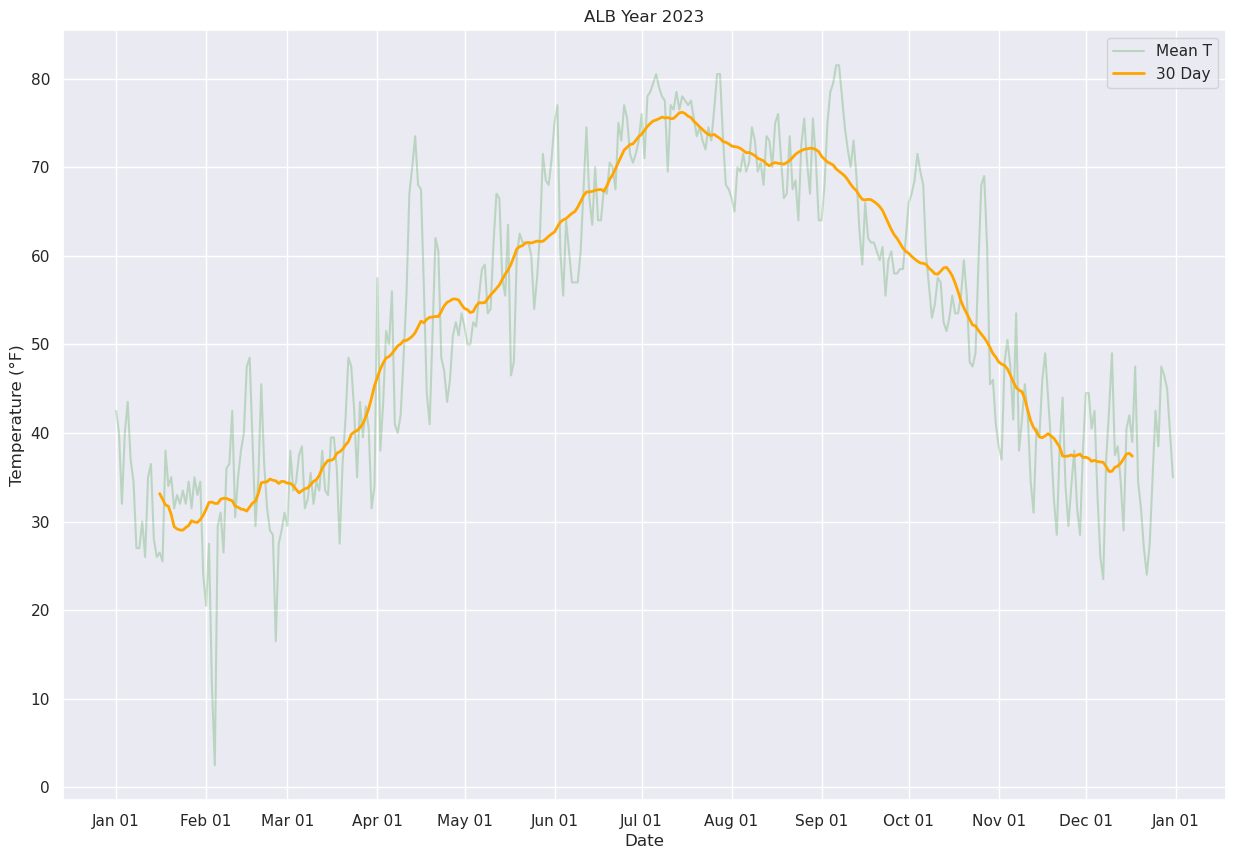

Display just the daily and 30-day running mean.

fig, ax = plt.subplots(figsize=(15,10))

ax.plot (date, meanT, color='green',label = "Mean T",alpha=0.2)

ax.plot (date, meanTr30.mean(), color='orange',label = "30 Day", alpha=1.0, linewidth=2)

ax.set_title (f"ALB Year {year}")

ax.set_xlabel('Date')

ax.set_ylabel('Temperature (°F)' )

ax.xaxis.set_major_locator(MonthLocator(interval=1))

dateFmt = DateFormatter('%b %d')

ax.xaxis.set_major_formatter(dateFmt)

ax.legend (loc="best")

<matplotlib.legend.Legend at 0x145a19121310>