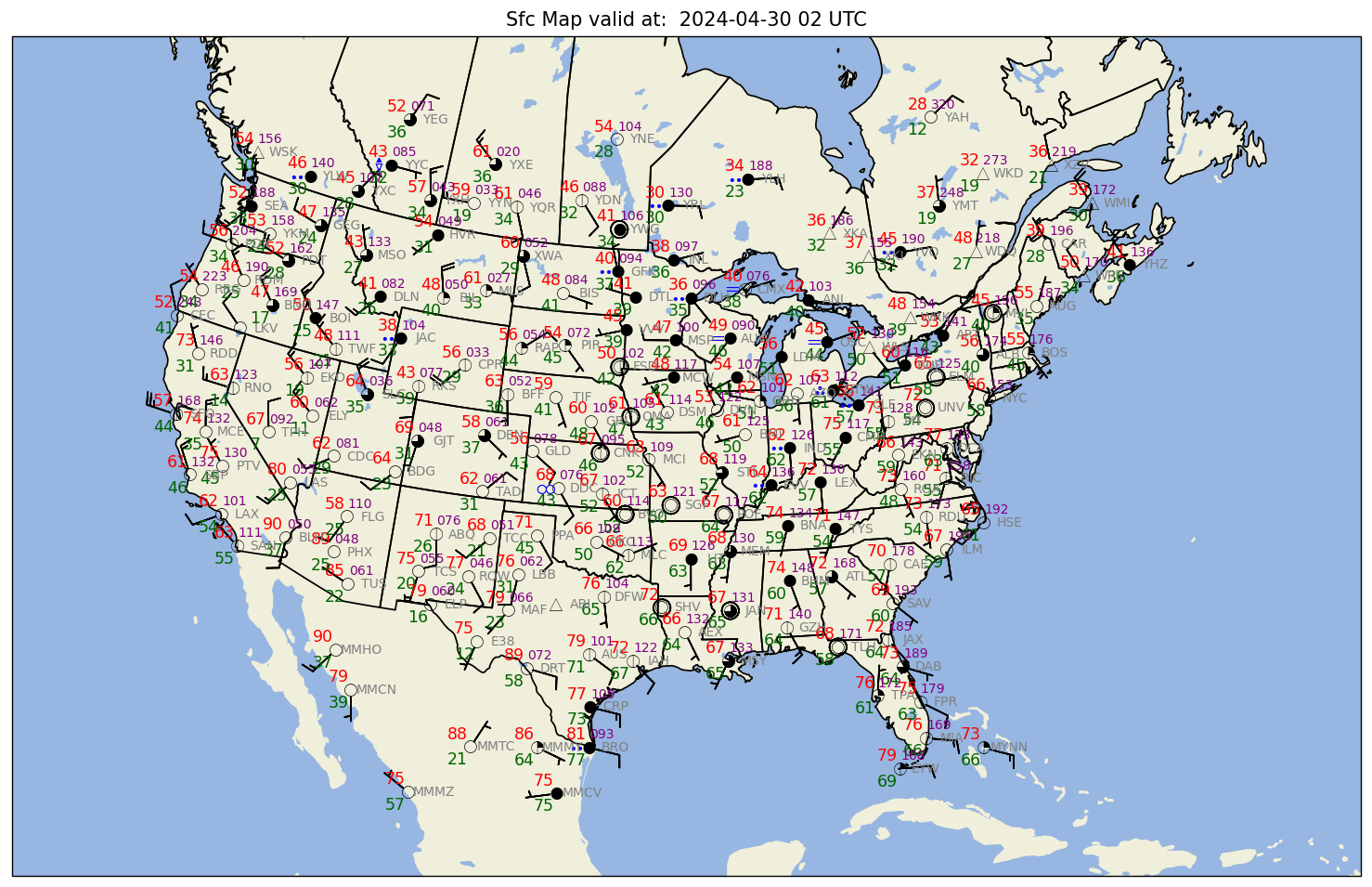

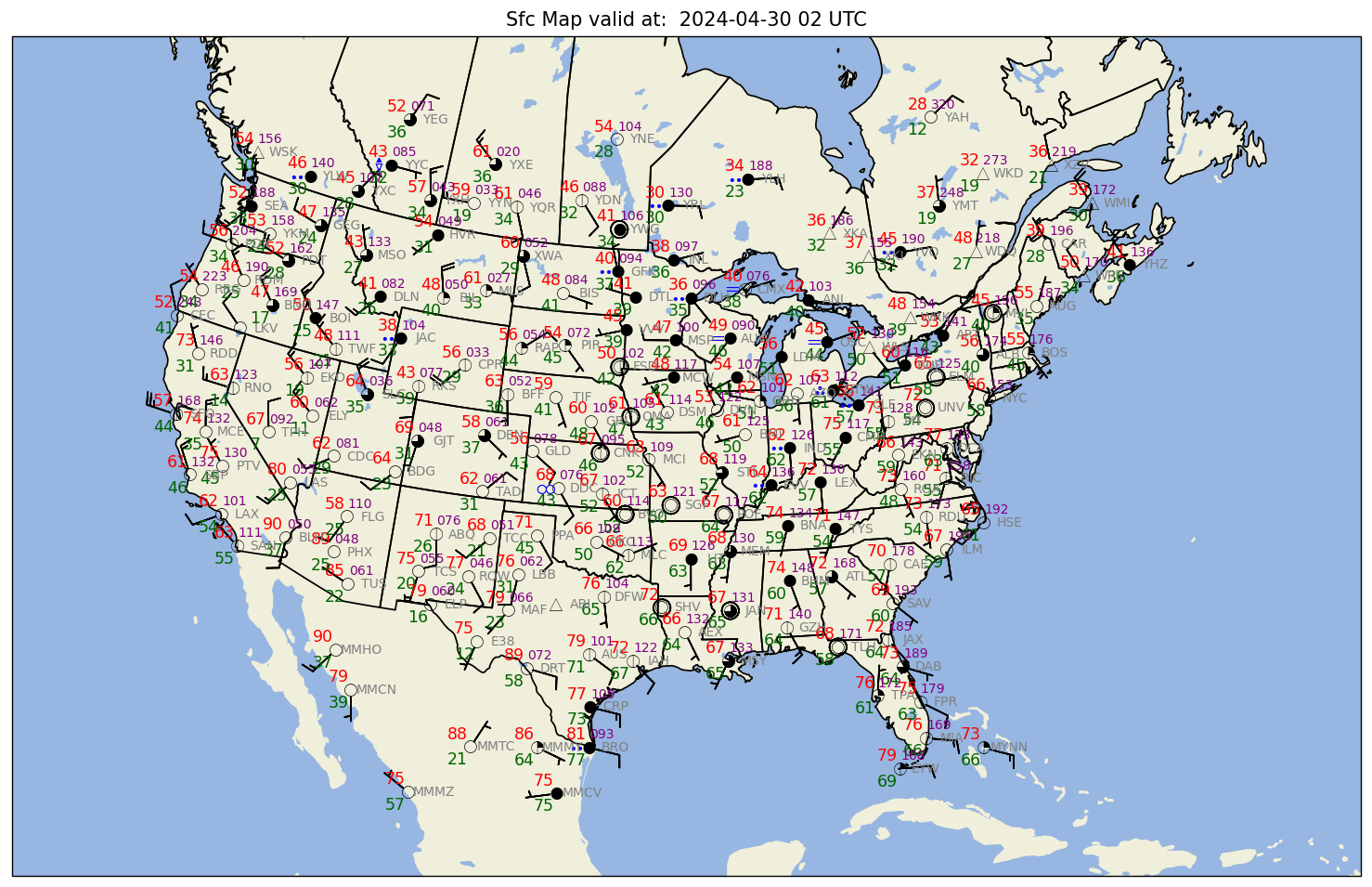

Surface map of METAR data


Table of Contents

- Objective
- Strategy
- Step 0: Import required packages
- Step 1: Browse a THREDDS Data Server (TDS)
- Step 2: Determine the date and hour to gather observations
- Step 3: Surface observations
  - Step 3a: Determine catalog location for Surface observations
  - Step 3b: Subset Surface observations
  - Step 3c: Retrieve Surface observations
  - Step 3d: Process Surface observations
  - Step 3e: Visualize Surface observations

Objective

In this notebook, we will make a surface map based on current observations from METAR sites across North America.

Step 0: Import required packages


But first, we need to import all our required packages. Today we’re working with:

- datetime
- numpy
- pandas
- matplotlib
- cartopy
- metpy
- siphon

We will also import a couple of functions that convert weather and cloud cover symbols from METAR files encoded in GEMPAK format.

```python
from datetime import datetime, timedelta

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import cartopy.crs as ccrs
import cartopy.feature as cfeature
from metpy.calc import wind_components, reduce_point_density
from metpy.units import units
from metpy.plots import StationPlot
from metpy.plots.wx_symbols import current_weather, sky_cover, wx_code_map
from siphon.catalog import TDSCatalog
from siphon.ncss import NCSS

# Load in a collection of functions that process GEMPAK weather conditions and cloud cover data.
%run /kt11/ktyle/python/metargem.py
```

Step 1: Browse a THREDDS Data Server (TDS)


A THREDDS Data Server provides us with coherent access to a large collection of real-time and archived datasets from a variety of environmental data sources at a number of distributed server sites. Various institutions serve data via THREDDS, including our department. You can browse our department’s TDS in your web browser using this link:

http://thredds.atmos.albany.edu:8080/thredds

Let’s take a few moments to browse the catalog in a new tab of your web browser.

Step 2: Determine the date and hour to gather observations

```python
# Use the current time, or set your own for a past time.
# Set current to False if you want to specify a past time.
nowTime = datetime.utcnow()

current = True
#current = False

if current:
    validTime = datetime.utcnow()
    year = validTime.year
    month = validTime.month
    day = validTime.day
    hour = validTime.hour
else:
    year = 2024
    month = 1
    day = 11
    hour = 22

# Rebuild validTime from its parts, which truncates it to the top of the hour
validTime = datetime(year, month, day, hour)

deltaTime = nowTime - validTime
deltaDays = deltaTime.days

timeStr = validTime.strftime("%Y-%m-%d %H UTC")
timeStr2 = validTime.strftime("%Y%m%d%H")

print(timeStr)
print(validTime)
print(deltaTime)
print(deltaDays)
```

```
2024-04-30 02 UTC
2024-04-30 02:00:00
0:07:01.973962
0
```
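`datetime.utcnow()` returns a naive datetime and is deprecated as of Python 3.12 (this notebook ran on Python 3.11, where it is fine). If you move to a newer Python, a minimal sketch of the same "current hour" logic with timezone-aware datetimes is below; `top_of_hour`, `now_utc` and `valid_utc` are hypothetical names, not part of the notebook. Subtracting a naive datetime from an aware one raises a `TypeError`, so every datetime in the calculation has to be aware. We have not checked how siphon formats an aware datetime in `query.time()`, so verify the request before switching.

```python
from datetime import datetime, timezone


def top_of_hour(t):
    """Truncate a datetime to the start of its hour (hypothetical helper)."""
    return t.replace(minute=0, second=0, microsecond=0)


# Timezone-aware replacement for datetime.utcnow()
now_utc = datetime.now(timezone.utc)
valid_utc = top_of_hour(now_utc)

# Both operands are aware, so this subtraction is allowed
delta = now_utc - valid_utc
print(valid_utc.strftime("%Y-%m-%d %H UTC"), delta, delta.days)
```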

Step 3: Surface observations

Step 3a: Determine catalog location for Surface observations


If the date is within the past week, use the current METAR data catalog. Otherwise, use the archive METAR data catalog.

```python
if deltaDays <= 7:
    # First URL has a subset of stations, just over N. America
    NAmCurrent = 'http://thredds.atmos.albany.edu:8080/thredds/catalog/metar/ncdecodedNAm/catalog.xml?dataset=metar/ncdecodedNAm/Metar_NAm_Station_Data_fc.cdmr'
    # Second URL has all stations worldwide; it will take longer to load, and you will need to adjust the point density in Step 3d
    WorldCurrent = 'http://thredds.atmos.albany.edu:8080/thredds/catalog/metar/ncdecoded/catalog.xml?dataset=metar/ncdecoded/Metar_Station_Data_fc.cdmr'
    metar_cat_url = NAmCurrent
    # metar_cat_url = WorldCurrent
else:
    metar_cat_url = 'http://thredds.atmos.albany.edu:8080/thredds/catalog/metarArchive/ncdecoded/catalog.xml?dataset=metarArchive/ncdecoded/Archived_Metar_Station_Data_fc.cdmr'
```

Note:

In general, our METAR archive goes back two years. Requests for older dates may fail. If you need data for an earlier date, email Ross or Kevin.
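The catalog choice above keys off `deltaTime.days`, which counts whole days and drops the remainder, so a time 7 days and 23 hours ago still counts as "within the past week". A small sketch of the same rule as a function, with a warning for the roughly two-year archive limit mentioned in the note, is below. The function name and the 730-day cutoff are our own assumptions, not part of the notebook or the server configuration.

```python
from datetime import datetime, timedelta

# Catalog URLs copied from the cell above
NAM_CURRENT = ('http://thredds.atmos.albany.edu:8080/thredds/catalog/metar/ncdecodedNAm/'
               'catalog.xml?dataset=metar/ncdecodedNAm/Metar_NAm_Station_Data_fc.cdmr')
ARCHIVE = ('http://thredds.atmos.albany.edu:8080/thredds/catalog/metarArchive/ncdecoded/'
           'catalog.xml?dataset=metarArchive/ncdecoded/Archived_Metar_Station_Data_fc.cdmr')

# Assumption: "two years" approximated as 730 days
ARCHIVE_LIMIT = timedelta(days=730)


def choose_metar_catalog(valid_time, now):
    """Hypothetical helper mirroring the notebook's deltaDays <= 7 rule."""
    delta = now - valid_time
    if delta.days <= 7:  # .days drops the remainder, so 7 days 23 h still qualifies
        return NAM_CURRENT
    if delta > ARCHIVE_LIMIT:
        print("Warning: requests older than about two years may fail.")
    return ARCHIVE


now = datetime(2024, 4, 30, 2, 7)
print(choose_metar_catalog(datetime(2024, 4, 22, 3), now) == NAM_CURRENT)
```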

```python
# Create a TDS catalog object from the URL we created above, and examine it.
# The .cdmr extension represents a dataset that works especially well with irregularly-spaced point data sources, such as METAR sites.
catalog = TDSCatalog(metar_cat_url)
catalog
```

```
Feature Collection
```

```python
# The catalog contains a single dataset, a Feature Collection. We now create an object to store this dataset.
metar_dataset = catalog.datasets['Feature Collection']
```

This dataset consists of multiple hours and locations. We are only interested in a subset of it: the hour we selected in Step 2, and sites within a geographic domain that we will define below. For this, we will exploit a feature of THREDDS that allows for easy subsetting: the NetCDF Subset Service (NCSS).

```python
ncss_url = metar_dataset.access_urls['NetcdfSubset']
print(ncss_url)

# Create an NCSS client for this endpoint
ncss = NCSS(ncss_url)
```

```
http://thredds.atmos.albany.edu:8080/thredds/ncss/metar/ncdecodedNAm/Metar_NAm_Station_Data_fc.cdmr
```
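The printed NCSS endpoint relates to the catalog URL by a simple pattern: the catalog's `dataset` query parameter is appended to `/thredds/ncss/`. The sketch below reproduces that pattern with the standard library only to show how the two URLs relate. `ncss_url_from_catalog` is a hypothetical helper inferred from this one example; siphon itself builds the endpoint from the service definitions in the catalog, which is the reliable route.

```python
from urllib.parse import urlsplit, parse_qs


def ncss_url_from_catalog(catalog_url):
    """Hypothetical helper: rebuild the NCSS endpoint from a catalog URL.

    Assumes the server mounts NCSS at <host>/thredds/ncss/<dataset path>,
    as the printed URL above suggests.
    """
    parts = urlsplit(catalog_url)
    dataset = parse_qs(parts.query)['dataset'][0]
    return f"{parts.scheme}://{parts.netloc}/thredds/ncss/{dataset}"


cat_url = ('http://thredds.atmos.albany.edu:8080/thredds/catalog/metar/ncdecodedNAm/'
           'catalog.xml?dataset=metar/ncdecodedNAm/Metar_NAm_Station_Data_fc.cdmr')
print(ncss_url_from_catalog(cat_url))
```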

One of the `ncss` object’s attributes is `variables`, the set of variable names available in this dataset. Let’s look at it:

```python
ncss.variables
```

```
{'ALTI', 'CEIL', 'CHC1', 'CHC2', 'CHC3', 'COUN', 'CTYH', 'CTYL', 'CTYM',
 'DRCT', 'DWPC', 'GUST', 'MSUN', 'P01I', 'P03C', 'P03D', 'P03I', 'P06I',
 'P24I', 'PMSL', 'SKNT', 'SNEW', 'SNOW', 'SPRI', 'STAT', 'STD2', 'STNM',
 'T6NC', 'T6XC', 'TDNC', 'TDXC', 'TMPC', 'VSBY', 'WEQS', 'WNUM', '_isMissing'}
```

These are abbreviations that the GEMPAK data analysis/visualization package uses for all the meteorological data potentially encoded into a METAR observation.
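For reference, here is a small lookup table for the 14 variables this notebook requests in Step 3c. The descriptions are our reading of GEMPAK naming conventions rather than text quoted from the GEMPAK documentation, and the units listed are the ones the unit-assignment code in Step 3d assumes; check the GEMPAK parameter documentation before reusing them elsewhere. `GEMPAK_METAR_VARS` and `describe` are hypothetical names.

```python
# Our reading of the GEMPAK names requested in Step 3c (verify before reuse)
GEMPAK_METAR_VARS = {
    'TMPC': 'air temperature (degC)',
    'DWPC': 'dewpoint temperature (degC)',
    'PMSL': 'mean sea-level pressure (hPa)',
    'ALTI': 'altimeter setting (inHg)',
    'SKNT': 'wind speed (knots)',
    'DRCT': 'wind direction (degrees)',
    'VSBY': 'visibility',
    'WNUM': 'numeric present-weather code',
    'CHC1': 'combined cloud height and coverage, layer 1',
    'CHC2': 'combined cloud height and coverage, layer 2',
    'CHC3': 'combined cloud height and coverage, layer 3',
    'CTYL': 'low cloud type',
    'CTYM': 'middle cloud type',
    'CTYH': 'high cloud type',
}


def describe(name):
    """Return a short description of a GEMPAK variable name, if we have one."""
    return GEMPAK_METAR_VARS.get(name, 'not described here')


for name in ('TMPC', 'ALTI', 'GUST'):
    print(name, '->', describe(name))
```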

Step 3b: Subset Surface Observations


Now, let’s request all stations within a bounding box for a given time, select certain variables, and create a surface station plot.

- Make a new NCSS query that specifies a regional domain and a particular time.
- Request data closest to “now”, or specify a past date and hour yourself in Step 2.
- Specify which variables to retrieve.

Set the domain from which to gather data; it also defines the plot region.

```python
latN = 55
latS = 20
lonW = -125
lonE = -60

cLat = (latN + latS) / 2
cLon = (lonW + lonE) / 2
```

Step 3c: Retrieve Surface Observations


Create an object to build our data query.

```python
query = ncss.query()
query.lonlat_box(north=latN-.25, south=latS+0.5, east=lonE-.25, west=lonW+.25)

# We actually specify a time one minute earlier than the specified hour; this is a quirk of how the data are stored
# on the THREDDS server and ensures we will get data corresponding to the hour we specified.
query.time(validTime - timedelta(minutes=1))

# Select the variables to query. Note that the variable names depend on the source of the METAR data.
# The 'GEMPAK-like' 4-character names are from the UAlbany THREDDS.
query.variables('TMPC', 'DWPC', 'PMSL',
                'SKNT', 'DRCT', 'ALTI', 'WNUM', 'VSBY', 'CHC1', 'CHC2', 'CHC3', 'CTYH', 'CTYM', 'CTYL')
query.accept('csv')
```

```
var=CTYL&var=SKNT&var=CTYH&var=VSBY&var=CHC3&var=CHC1&var=PMSL&var=WNUM&var=DWPC&var=CTYM&var=TMPC&var=ALTI&var=DRCT&var=CHC2&time=2024-04-30T01%3A59%3A00&west=-124.75&east=-60.25&south=20.5&north=54.75&accept=csv
```
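siphon assembles the request string above for us. The standard-library sketch below shows how the bounding box, the one-minute-early time, and the variable list map onto those query parameters. It is an illustration of the parameters, not siphon's implementation: `build_ncss_query` is a hypothetical helper, and its variable order follows the list you pass in, whereas siphon's output above lists variables in arbitrary set order.

```python
from datetime import datetime, timedelta
from urllib.parse import urlencode


def build_ncss_query(lat_n, lat_s, lon_w, lon_e, valid_time, variables):
    """Hypothetical helper: encode an NCSS point query like the one above."""
    params = [('var', v) for v in variables]
    params += [
        # Request one minute before the hour, matching the query above
        ('time', (valid_time - timedelta(minutes=1)).isoformat()),
        # Same insets from the domain edges as query.lonlat_box above
        ('west', lon_w + 0.25), ('east', lon_e - 0.25),
        ('south', lat_s + 0.5), ('north', lat_n - 0.25),
        ('accept', 'csv'),
    ]
    return urlencode(params)  # ':' in the time is percent-encoded as %3A


print(build_ncss_query(55, 20, -125, -60, datetime(2024, 4, 30, 2),
                       ['TMPC', 'DWPC', 'PMSL']))
```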

Pass the query to the THREDDS server’s NCSS service. We can then create a pandas DataFrame from the returned object.

```python
data = ncss.get_data(query)
df = pd.DataFrame(data)
df
```

```
/knight/mamba_aug23/envs/jan24_env/lib/python3.11/site-packages/siphon/ncss.py:432: VisibleDeprecationWarning: Reading unicode strings without specifying the encoding argument is deprecated. Set the encoding, use None for the system default.
  arrs = np.genfromtxt(fobj, dtype=None, names=names, delimiter=',',
```

| | time | station | latitude | longitude | CTYL | SKNT | CTYH | VSBY | CHC3 | CHC1 | PMSL | WNUM | DWPC | CTYM | TMPC | ALTI | DRCT | CHC2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | b'2024-04-30T02:00:00Z' | b'ABI' | 32.419 | -99.680 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 29.840000 | NaN | NaN |
| 1 | b'2024-04-30T02:00:00Z' | b'ABQ' | 35.049 | -106.620 | NaN | 8.0 | NaN | 10.0 | NaN | 1206.0 | 1007.60004 | NaN | -3.300000 | NaN | 21.7 | 29.920000 | 170.0 | 2006.0 |
| 2 | b'2024-04-30T02:00:00Z' | b'AEX' | 31.329 | -92.550 | NaN | 6.0 | NaN | 10.0 | NaN | 1.0 | 1013.20000 | NaN | 17.800001 | NaN | 18.9 | 29.910000 | 150.0 | NaN |
| 3 | b'2024-04-30T02:00:00Z' | b'ALB' | 42.750 | -73.800 | NaN | 4.0 | NaN | 10.0 | NaN | 2503.0 | 1017.40000 | NaN | 4.400000 | NaN | 13.3 | 30.039999 | 360.0 | NaN |
| 4 | b'2024-04-30T02:00:00Z' | b'ANJ' | 46.479 | -84.370 | NaN | 7.0 | NaN | 10.0 | NaN | 84.0 | 1010.30000 | NaN | 4.400000 | NaN | 5.6 | 29.830000 | 100.0 | NaN |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 179 | b'2024-04-30T01:00:00Z' | b'YXE' | 52.169 | -106.680 | 9.0 | 14.0 | 1.0 | 15.0 | NaN | 963.0 | 1002.00000 | NaN | 2.000000 | 0.0 | 16.0 | 29.550000 | 300.0 | 2603.0 |
| 180 | b'2024-04-30T01:00:00Z' | b'YXH' | 50.020 | -110.720 | 1.0 | 14.0 | 0.0 | 30.0 | NaN | 1103.0 | 1004.30000 | NaN | 1.000000 | 0.0 | 14.0 | 29.630000 | 340.0 | NaN |
| 181 | b'2024-04-30T01:00:00Z' | b'YYC' | 51.119 | -114.019 | 5.0 | 6.0 | 0.0 | 6.0 | NaN | 403.0 | 1008.50000 | 51.0 | 0.000000 | 5.0 | 6.0 | 29.699999 | 90.0 | 804.0 |
| 182 | b'2024-04-30T01:00:00Z' | b'YYN' | 50.279 | -107.680 | NaN | 16.0 | NaN | 9.0 | NaN | 1.0 | 1003.30000 | NaN | -7.000000 | NaN | 15.0 | 29.599998 | 270.0 | NaN |
| 183 | b'2024-04-30T01:00:00Z' | b'YYZ' | 43.669 | -79.599 | 0.0 | 8.0 | 0.0 | 15.0 | NaN | 703.0 | 1013.70000 | NaN | 8.000000 | 5.0 | 11.0 | 29.920000 | 100.0 | 1004.0 |

184 rows × 18 columns

Step 3d: Process Surface Observations


Read in several of the columns, assign or convert units as necessary, and convert the GEMPAK cloud cover and present weather codes to MetPy’s representation.

```python
lats = data['latitude']
lons = data['longitude']
tair = (data['TMPC'] * units('degC')).to('degF')
dewp = (data['DWPC'] * units('degC')).to('degF')
altm = (data['ALTI'] * units('inHg')).to('mbar')
slp = data['PMSL'] * units('hPa')

# Convert wind to components
u, v = wind_components(data['SKNT'] * units.knots, data['DRCT'] * units.degree)

# Replace missing wx codes or those >= 100 with 0, and convert to MetPy's present weather code.
# convert_wnum and calc_clouds come from metargem.py, loaded in Step 0.
wnum = np.nan_to_num(data['WNUM'], copy=True).astype(int)
convert_wnum(wnum)

# Replace missing (NaN) cloud values with 0 and convert to the proper code
chc1 = np.nan_to_num(data['CHC1'], copy=True).astype(int)
chc2 = np.nan_to_num(data['CHC2'], copy=True).astype(int)
chc3 = np.nan_to_num(data['CHC3'], copy=True).astype(int)
cloud_cover = calc_clouds(chc1, chc2, chc3)

# Station IDs come back as bytes instead of strings; convert them to an array of strings.
stid = np.array([s.decode() for s in data['station']])
```
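To make the unit handling in the cell above concrete, here is the same arithmetic in plain Python for one station, ABQ, from the table above. This is a sketch of the conversions, not MetPy's code: the inHg-to-hPa factor is rounded, and `wind_uv` (a hypothetical name) follows the sign convention MetPy documents for `wind_components`, where the direction is where the wind blows *from*, so a wind from 170° has a positive v (northward) component.

```python
import math


def c_to_f(t_c):
    """Celsius to Fahrenheit, as units('degC') -> 'degF' does."""
    return t_c * 9 / 5 + 32


def inhg_to_hpa(p_inhg):
    """Inches of mercury to hPa (= mbar); 1 inHg is about 33.8639 hPa."""
    return p_inhg * 33.8639


def wind_uv(speed, direction_deg):
    """u, v from speed and meteorological direction (blowing FROM)."""
    rad = math.radians(direction_deg)
    return -speed * math.sin(rad), -speed * math.cos(rad)


# ABQ row: TMPC 21.7, ALTI 29.92, SKNT 8, DRCT 170
print(round(c_to_f(21.7), 2))        # 71.06
print(round(inhg_to_hpa(29.92), 1))  # 1013.2
u_abq, v_abq = wind_uv(8.0, 170.0)
print(round(u_abq, 2), round(v_abq, 2))  # -1.39 7.88
```

Distinct names (`u_abq`, `v_abq`) are used so running this in the notebook does not overwrite the `u` and `v` arrays.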

The next step removes overlapping stations using reduce_point_density, which returns a boolean mask we can apply to each data array to filter the points.

```python
# Project points so that we're filtering based on the way the stations are laid out on the map
proj = ccrs.Stereographic(central_longitude=cLon, central_latitude=cLat)
xy = proj.transform_points(ccrs.PlateCarree(), lons, lats)

# Reduce point density so that there's only one point within a circle whose radius is specified in meters.
# This value will need to change depending on how large of an area you are plotting.
density = 150000
mask = reduce_point_density(xy, density)
```
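The idea behind the density filter can be sketched as a greedy pass: visit stations in order, and let each station that is still kept remove every other station within the radius. The pure-Python version below is a simplified illustration under that assumption, without the spatial index or the optional `priority` argument that MetPy's `reduce_point_density` offers. `thin_points` is a hypothetical name, it takes a list of (x, y) pairs in meters rather than the projected array used above, and the sample coordinates are made up.

```python
import math


def thin_points(xy, radius):
    """Greedy thinning in the spirit of reduce_point_density (simplified).

    Visit points in order; each point still kept removes every later point
    within `radius` of it. Returns a list of booleans usable as a mask.
    """
    keep = [True] * len(xy)
    for i, (xi, yi) in enumerate(xy):
        if not keep[i]:
            continue
        for j in range(i + 1, len(xy)):
            if keep[j] and math.hypot(xy[j][0] - xi, xy[j][1] - yi) <= radius:
                keep[j] = False
    return keep


# Made-up projected coordinates in meters
pts = [(0, 0), (100_000, 0), (200_000, 0), (0, 400_000)]
print(thin_points(pts, 150_000))  # [True, False, True, True]
```

A larger radius thins more aggressively, which is why a world-wide plot needs a bigger `density` value than the North American one.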

Step 3e: Visualize Surface Observations


Simple station plotting using plot methods

One way to create station plots with MetPy is to create an instance of StationPlot and call various plot methods, like plot_parameter, to plot arrays of data at locations relative to the center point.

In addition to plotting values, StationPlot has support for plotting text strings, symbols, and plotting values using custom formatting.

Plotting symbols involves mapping integer values to glyphs in MetPy’s custom weather symbols font. MetPy provides mappings for converting WMO codes to their appropriate symbols; the sky_cover and current_weather objects used below are two such mappings.

Now we plot with arr[mask] for every data array arr we use, so that only the stations kept by reduce_point_density appear on the map.

```python
# Set up a plot with map features
# First set dpi ("dots per inch") - higher values will give us a less pixelated final figure.
dpi = 125
fig = plt.figure(figsize=(15, 10), dpi=dpi)

proj = ccrs.Stereographic(central_longitude=cLon, central_latitude=cLat)
ax = fig.add_subplot(1, 1, 1, projection=proj)
ax.set_facecolor(cfeature.COLORS['water'])

land_mask = cfeature.NaturalEarthFeature('physical', 'land', '50m',
                                         edgecolor='face',
                                         facecolor=cfeature.COLORS['land'])
lake_mask = cfeature.NaturalEarthFeature('physical', 'lakes', '50m',
                                         edgecolor='face',
                                         facecolor=cfeature.COLORS['water'])
state_borders = cfeature.NaturalEarthFeature(category='cultural', name='admin_1_states_provinces_lakes',
                                             scale='50m', facecolor='none')

ax.add_feature(land_mask)
ax.add_feature(lake_mask)
ax.add_feature(state_borders, linestyle='solid', edgecolor='black')

# Slightly reduce the extent of the map as compared to the subsetted data region; this helps eliminate data from being plotted beyond the frame of the map.
# A tighter alternative: ax.set_extent((lonW+2, lonE-4, latS+1, latN-2), crs=ccrs.PlateCarree())
ax.set_extent((lonW+0.2, lonE-0.2, latS+.1, latN-.1), crs=ccrs.PlateCarree())

# If we wanted to add grid lines to our plot:
# ax.gridlines()

# Create a station plot pointing to an Axes to draw on as well as the location of points
stationplot = StationPlot(ax, lons[mask], lats[mask], transform=ccrs.PlateCarree(),
                          fontsize=8)

stationplot.plot_parameter('NW', tair[mask], color='red', fontsize=10)
stationplot.plot_parameter('SW', dewp[mask], color='darkgreen', fontsize=10)

# Below, we are using a custom formatter to control how the sea-level pressure
# values are plotted. This uses the standard trailing 3 digits of the pressure value
# in tenths of millibars.
stationplot.plot_parameter('NE', slp[mask], color='purple', formatter=lambda v: format(10 * v, '.0f')[-3:])

stationplot.plot_symbol('C', cloud_cover[mask], sky_cover)
stationplot.plot_symbol('W', wnum[mask], current_weather, color='blue', fontsize=12)
stationplot.plot_text((2, 0), stid[mask], color='gray')

# zorder: artists with a higher zorder are drawn on top of those with a lower one (the default is 1).
# Raising it here is necessary for the wind barbs to appear.
stationplot.plot_barb(u[mask], v[mask], zorder=2)

plotTitle = f"Sfc Map valid at: {timeStr}"
ax.set_title(plotTitle);
```
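The lambda passed as `formatter` encodes sea-level pressure the way station models usually do: multiply by 10 to get tenths of hPa, round, and keep the last three digits. The sketch below applies the same expression to plain floats, plus a decoder based on the common rule of thumb that codes of 500 and above belong to the 900s hPa and codes below 500 to the 1000s. Both helper names are hypothetical, and the decoding rule is a convention that breaks down for pressures below 950 hPa or at 1050 hPa and above.

```python
def slp_code(p_hpa):
    """Station-model pressure code: last three digits of tenths of hPa."""
    return format(10 * p_hpa, '.0f')[-3:]


def slp_from_code(code):
    """Rule-of-thumb decoder: >= 500 -> 9xx.x hPa, otherwise 10xx.x hPa."""
    tenths = int(code)
    return (9000 + tenths) / 10 if tenths >= 500 else (10000 + tenths) / 10


# Pressures from the table above, plus one below 1000 hPa
for p in (1007.6, 1017.4, 1002.0, 987.3):
    print(p, '->', slp_code(p), '->', slp_from_code(slp_code(p)))
```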

```python
# In order to see the entire figure, type the name of the figure object below.
fig
```

```python
figName = f'{timeStr2}_sfmap.png'
fig.savefig(figName)
```